Below I have written down those of my ideas that I am pleased with, but which I have chosen not to pursue further. Feel free share your thoughts on the concepts presented here, or utilize them if you are inclined to do so.

Click the titles to see the full description for each concept.

Improving skinning to achieve one-size-fits-all avatars

The most common VR avatars are humanoid/biped 3D models that come in all shapes and sizes, as do VR users. For maximizing the virtual body ownership illusion, the avatar should be scaled to fit the proportions of the user.

Here I propose a simple addition to existing game engine skinning systems, which allows arbitrary VR avatars’ virtual body proportions to be automatically matched with the body proportions of any user (both body segments’ length and width). This addition also increases real-time avatar modification possibilities without the need for blendshapes.

Scaling with one scalar to make the avatar and user height match is not sufficient, because body segment (e.g. forearm, upper arm) proportions vary between different avatars and users. For achieving natural virtual body ownership, the goal is to have the avatar’s virtual joints co-locate with the user’s real-word body joints.

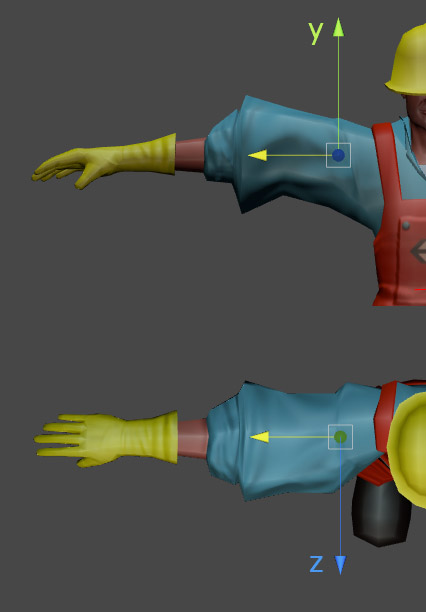

There are two different approaches to co-locating avatar’s joints with those of the user: 1) Translation offset, which tends to cause self-intersection in the skinned mesh when the virtual bone is shortened, ruling out this approach. 2) Uniformly scaling the bone, which causes irregularity in the body segment thickness whenever the avatar’s relative body segment proportions differ from those of the user. In RUIS for Unity body segment thickness can be made to persist via non-uniform scaling, but this has side-effects: rotation and scaling of bones require additional correction terms, and skew is introduced in mesh portions whose parent joints (transforms) have non-uniform scaling.

A simple addition to the skinning system fixes the aforementioned issues, and makes avatar modification easier without having to create custom thickness blendshapes: each bone/joint is associated with a thickness multiplier (variable) and a median thickness (read-only value) within the skinning system. By default each bone’s thickness multiplier is 1, and this value affects the vertices’ radial distance from the bone axis; smaller values pull the associated vertices perpendicularly towards the bone axis and bigger values push them away. This allows modifying body segment thickness without affecting its length. The avatar’s bone lengths can then be made to match user’s body proportions using uniform scaling, while preserving body segment thickness by changing the bone thickness multipliers in conjunction; for example the avatar’s forearm and upper arm can have the same thickness even when their lengths are modified to match user’s proportions.

The read-only median thickness value for each bone exists so that the thickness of a body segment can be estimated in real-world units. The median thickness is calculated as the median distance from the bone axis to those vertices that are associated with the bone in question. The length and thickness of each body segment can be independently modified in real-world units, by simultaneously adjusting the thickness multiplier and uniform scaling, while taking the median thickness into account in the calculations.

The scheme described above is an addition to the underlying skinning system, and it does not care about whether utilizing linear blend skinning (used in most game engines), dual quaternion skinning, or something more exotic. Perhaps the most important implementation detail is how the bone axis is defined: the line that intersects the bone’s two end-point joints seems like the most natural candidate, but the edge-case of zero-length bones needs to be handled. Furthermore, if a vertex is affected by multiple non-zero bone weights, then that vertex’s thickness offsets should be cumulative and affected by the bone weights.

Affecting body segment thickness with just one thickness multiplier variable generally works best when the bone is inside its mesh portion volume, and that volume is somewhat uniformly distributed around the bone axis. More granularity could be added by having two thickness axes that are perpendicular to the bone axis. This would be useful if one wanted to make the avatar’s chest or belly thicker without simultaneously increasing its width. In the case of limbs such granularity is rarely needed; for example one usually wants to make legs uniformly thinner, not just laterally thinner.

All in all, blendshapes offer visually the best results when it comes to avatar modification, although creating thickness blendshapes where specific values are connected to body segment thickness in real-world units requires extra work. Unfortunately creating good blendshapes takes skill and effort, and most 3D characters found online lack blendshapes altogether. Until standardized blendshapes can be created automatically for arbitrary humanoid 3D models with a push of a button, the scheme presented here offers a simple, standardized approach to modifying arbitrary VR avatars.

[Update 20.7.2018] A more elaborate customization of arbitrary humanoid avatars could be achieved by mapping each avatar model automatically to a customizable template humanoid model (e.g. MakeHuman), so that parametric modification of the latter affects the former. Tesselation or even automatic retopologization of the original avatar model might be required. This approach would allow customization at the level of individual muscles or even facial features, and the use of built-in blendshapes of the template model to control perceived body fat and muscularity. Obviously such mapping is difficult to obtain automatically for those parts of the avatar model that have elaborate clothing, armor, or other accessories.

Facilitating lurkers in online VR worlds

Not everyone wants to participate in online VR worlds with an avatar: some will prefer to preview the VR world using a standard 2D monitor before account registration, while others will just want to ‘lurk’ without being seen.

A lurker in the context of online VR worlds is someone whose presence can’t be directly observed by other users; essentially, a lurker is without an avatar or voice. In online gaming these people are commonly referred to as spectators.

Once online VR worlds get interesting enough, people will want to lurk there for the same reason that lurking happens in message boards: for the passive consumption of good content (e.g. when you are simultaneously doing something else like eating or watching TV). Furthermore, when it comes to motion tracked avatars, interaction can be more exhausting and demanding when compared to text or voice-based online communication. Lurking is also a viable option for more shy users, who prefer the comfort that invisibility can bring.

Conversely, some avatar users will want to be shielded from lurkers. This could be achieved via a user preference for becoming invisible to lurkers or by making certain areas off limits to lurking.

Lurkers could be given an option to bring in their avatar at any time, so that they can start interacting when they are ready. Optionally, lurkers could participate by making comments or voice messages that are visible/audible to avatar users and other lurkers (when cheering, making suggestions, etc). Again, users should be able to choose whether they want to be exposed to lurker comments at all, or only to comments from lurkers that have a certain rating.

AR headset that enables seamless switching between VR development and testing

VR developers could wear AR headsets while developing, because wearing them does not prevent the use of a standard development interface (monitor, keyboard, and mouse). When the developed VR application is tested, the AR headset would be used to display the VR content. Thus there would be no need to spend time on putting on a VR headset (or alternatively flipping down its display).

This reduces the effects of “context switch” that occurs between each iteration of development and testing cycle. This is relevant, because constant testing and re-implementation is the second-most severe issue for VR developers according to the data (ratings from 132 VR developers) collected during my PhD research.

The AR headset would be primarily intended for testing the VR application’s user interface and interactive features. Color balance and other graphics related matters should still be tested with the targeted VR headsets, because optical see-through AR headsets obviously can’t block light, and video see-through has its own issues. Each major consumer VR headset could have a developer-only AR headset equivalent that utilizes the same tracking method, so that testing with the AR headset would be as close as possible to testing with the consumer VR headset.

This idea came to me while I was thinking of new ways to alleviate the issues in VR development in preparation of my PhD defense.

Killdozer, a sitting VR Experience based on a true story

While pondering about sitting VR and how enclosed virtual spaces seem like a natural fit for such experiences, I happened to remember the story of Colorado resident Marvin Heemeyer and his 2004 Killdozer rampage:

Heemeyer has become an underground hero to some, representing a vigilante who stood up to “the man”. His story contains many thematic elements that would work well in a game: injustice, vigilantism, running amok, and the power fantasy associated with the unstoppable machine, built by one man. In some sense Heeymeyer is a working class’ Tony Stark, Batman, and the Punisher all rolled into one.

As far as game mechanics are concerned, there could be at least two phases: building and designing a Killdozer, and then rampaging with it. The game could be mindless, GTA-style destruction derby, or involve more introspection on what it’s like to be on a last journey in the belly of a beast of your own making. Surreal elements could be easily added to the events of the game.

Heemeyer spent 18 months upgrading his 50 ton bulldozer to a tank; he used concrete and metal to build an impenetrable enclosure equipped with 3 rifles. Upon lowering the protective concrete hull on top of his bulldozer cabin there was no way out; he became one with the Killdozer, video monitors hooked onto external surveillance cameras being his only view outside. Heemeyer then went on a rampage in his hometown, destroying property of those whom he held grudges against: city officials, businesses, and the police.

"Sometimes reasonable men must do unreasonable things."

- quote from one of the notes left by Marvin Heemeyer.

Nothing could stop the Killdozer’s rampage: the local police, state troopers, and a SWAT team fired the bulldozer over 200 times, planted explosives on it, and dropped a flash-bang grenade in its exhaust pipe. A heavy wheel tractor-scraper was sent to face off the Killdozer, only to be humiliated by it. Colorado Governor even considered authorizing the National Guard to use an Apache attack helicopter to stop the Killdozer with a Hellfire missile. In the end, after inflicting $7 million of property damages, the Killdozer got stuck in a building’s basement, and its engine finally gave up. Unable to move, Marvin Heemeyer’s two hour rampage was over and he ended his own life with a handgun.

No one was injured in the incident, and Heemeyer was the only casualty. Some witnesses reported that Heemeyer consciously avoided injuring humans, with the intention of causing only property damage. He used the rifles installed on the Killdozer to shoot propane tanks and transformers. Other accounts tell that Heemeyer fired his rifles at state troopers and the wheel tractor-scraper driver.

Another incident resembling the Killdozer-case, involved a M60A3 Patton tank, and likely inspired GTA games to include tanks.

VR system with optical full-body tracking

Having experience with combining VR headsets with full-body tracking, I’ve been anticipating for several years that consumer VR systems would start incorporating such technology. Immersion is greater in VR when your avatar mimics the pose of your body, and social VR also calls for full-body tracking. Therefore it is just a matter of time when real-time mocap technology will be integrated with home VR systems like Oculus Rift, Vive, and PSVR. I expect this to happen with the 2nd or 3rd generation of these devices.

At the moment most full-body tracking systems require wearing a mocap suit or individual markers. Unfortunately that is too much hassle for a consumer product, even if it were just three additional Lighthouse tracking pucks. A markerless, optical full-body tracking system like Kinect eliminates the need for additional wearables. Regrettably Kinect tracking has too much latency and it is not robust enough for VR. But a system like Kinect can be improved upon, when using more than one sensor and better algorithms.

In 2015 when I read about HTC Vive and played around with its development kit, I thought about how convenient it would be to incorporate depth cameras into the Lighthouse base stations. One could create a sensor fusion algorithm that combines data from the two depth cameras and the Lighthouse sensors: the poses of the VR headset and hand-held controller could be directly used to get avatar’s head and hand poses and to restrict the solution space for inferring the body pose from the depth camera data. In other words, the Lighthouse sensor data would act as a prior for the full-body tracking algorithm.

While this approach doesn’t require any additional base stations, it would necessitate connecting the base stations to the computer, or at the very least to each other. Furthermore, each depth camera would most likely require their own ASIC or FPGA to perform all of the depth image processing (Kinect v2 does some of this with GPU), and a custom chip might be needed to speed up the sensor fusion. All this adds to the cost of the base stations, which already is too high. Moreover, it would be ideal for achieving smooth tracking if the depth camera frame rate would be close to the VR headset frame rate (90-120). At the moment most depth cameras have a frame rate of 30 frames per second.

These high requirements for software, hardware, the fact that Microsoft has a huge portfolio of full-body tracking patents, and the limited profit expectations even in a good scenario have kept me from pursuing this idea further. Recent developments in full-body tracking with RGB-cameras make this idea appear much more feasible though.